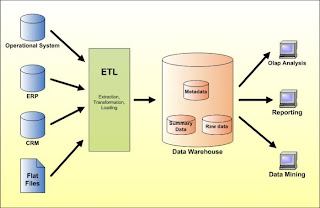

The architecture above is taken from www.databaseanswers.com. We recommend visiting that site for a good grounding in data modeling.

We will now define each term in the picture above to make the subject easier to follow.

1. Operational Data Store (ODS): a database designed to integrate data from multiple sources so that additional operations can be performed on it. The data is then passed back to the operational systems for further operations and to the data warehouse for reporting.

2. ERP: Enterprise resource planning (ERP) integrates internal and external management information across an entire organization, covering finance/accounting, manufacturing, sales and service, and so on.

Its purpose is to facilitate the flow of information between all business functions inside the boundaries of the organization and manage the connections to outside stakeholders.

3. CRM: Customer relationship management (CRM) is a widely implemented strategy for managing a company’s interactions with customers, clients, and sales prospects. It involves using technology to organize, automate, and synchronize business processes, principally sales activities but also marketing, customer service, and technical support.

Customer relationship management describes a company-wide business strategy including customer-interface departments as well as other departments.

4. Flat files in data warehousing: Flat files do not enforce referential integrity the way an RDBMS does, and their fields are usually separated by delimiters such as commas or pipes.

From Informatica 8.6 onward, unstructured data sources such as MS Word documents, email, and PDF files can also be used as sources.
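As a minimal sketch of the flat-file point above, the snippet below parses pipe-delimited text with Python's standard `csv` module and shows that nothing in the file itself prevents an order row from pointing at a customer that does not exist. The sample data, the column names, and the `read_flat_file` and `find_orphans` helpers are all hypothetical.

```python
import csv
import io

# Hypothetical pipe-delimited flat files (inlined as strings for the sketch).
CUSTOMERS = "customer_id|name\n1|Asha\n2|Ravi\n"
ORDERS = "order_id|customer_id|amount\n100|1|250.00\n101|3|75.50\n"


def read_flat_file(text, delimiter="|"):
    """Parse delimited text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text), delimiter=delimiter))


def find_orphans(orders, customers):
    """Return orders whose customer_id has no matching customer row.

    A flat file accepts such rows silently; an RDBMS foreign key would not.
    """
    known = {c["customer_id"] for c in customers}
    return [o for o in orders if o["customer_id"] not in known]


customers = read_flat_file(CUSTOMERS)
orders = read_flat_file(ORDERS)
orphans = find_orphans(orders, customers)
print(orphans)
```

In practice, a check like this usually has to be performed by the ETL job, because the flat file cannot enforce it.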

5. ETL (Extract, Transform, and Load): a process in database usage, and especially in data warehousing, that involves:

• Extracting data from outside sources

• Transforming it to fit operational needs (which can include quality levels)

• Loading it into the end target (database or data warehouse)
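The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the source is a small comma-delimited extract inlined as a string, the target is an in-memory SQLite database standing in for the warehouse, and the `sales` table, its columns, and the "amount is mandatory" quality rule are made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source extract: a comma-delimited file inlined as a string.
SOURCE = "id,name,amount\n1, alice ,10.5\n2,BOB,\n3,carol,7\n"


def extract(text):
    """Extract: read raw rows from the outside source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: clean names, cast types, drop rows failing a quality check."""
    clean = []
    for row in rows:
        if not row["amount"].strip():
            continue  # assumed quality rule: amount is mandatory
        clean.append(
            (int(row["id"]), row["name"].strip().title(), float(row["amount"]))
        )
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory stand-in for the warehouse
load(transform(extract(SOURCE)), conn)
loaded = conn.execute("SELECT id, name, amount FROM sales ORDER BY id").fetchall()
print(loaded)
```

Keeping each stage as its own function makes it easy to test the transformation rules without touching the source or the target.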

6. Data Marts: A data mart (DM) is the access layer of the data warehouse (DW) environment that is used to get data out to the users. The DM is a subset of the DW, usually oriented to a specific business line or team.
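To make the "subset of the DW" idea concrete, here is a minimal sketch in which the data mart is simply a SQL view over a warehouse table, restricted to one business line. The `dw_sales` table, the `retail_mart` view, and the sample rows are hypothetical, and an in-memory SQLite database stands in for the warehouse. Real data marts are often separate physical databases rather than views.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the data warehouse
conn.execute(
    "CREATE TABLE dw_sales (region TEXT, business_line TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO dw_sales VALUES (?, ?, ?)",
    [
        ("North", "Retail", 120.0),
        ("South", "Retail", 80.0),
        ("North", "Wholesale", 300.0),
    ],
)

# The "data mart" here is a view exposing only the Retail business line.
conn.execute(
    "CREATE VIEW retail_mart AS "
    "SELECT region, amount FROM dw_sales WHERE business_line = 'Retail'"
)

retail_rows = conn.execute(
    "SELECT region, amount FROM retail_mart ORDER BY region"
).fetchall()
print(retail_rows)
```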

For the definition of a data warehouse, please refer to the Introduction to Data Warehousing.

7. OLAP: Online Analytical Processing (OLAP) is a methodology that gives end users intuitive and rapid access to large amounts of data, to assist with deductions based on investigative reasoning.

OLAP systems need to:

1. Support the complex analysis requirements of decision-makers,

2. Analyze the data from a number of different perspectives (business dimensions), and

3. Support complex analyses against large input (atomic-level) data sets.
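The second requirement, looking at the same data from different business dimensions, can be illustrated with a small roll-up over atomic-level rows. This is only a sketch in plain Python: the fact rows, the three dimensions, and the `roll_up` helper are hypothetical, and a real OLAP engine would precompute and index such aggregates rather than scan a list.

```python
from collections import defaultdict

# Hypothetical atomic-level fact rows: (year, region, product, amount).
FACTS = [
    (2010, "North", "Laptop", 1000.0),
    (2010, "South", "Laptop", 400.0),
    (2011, "North", "Phone", 300.0),
    (2011, "South", "Laptop", 700.0),
]
# Position of each dimension inside a fact row.
DIMENSIONS = {"year": 0, "region": 1, "product": 2}


def roll_up(facts, *dims):
    """Sum the amount measure grouped by the chosen dimensions."""
    totals = defaultdict(float)
    for row in facts:
        key = tuple(row[DIMENSIONS[d]] for d in dims)
        totals[key] += row[3]
    return dict(totals)


by_region = roll_up(FACTS, "region")
by_year_product = roll_up(FACTS, "year", "product")
print(by_region)
print(by_year_product)
```

The same fact rows answer both questions; only the grouping key changes, which is the essence of analyzing data along different dimensions.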

8. OLTP: Online transaction processing (OLTP) refers to a class of systems that facilitate and manage transaction-oriented applications, typically for data entry and retrieval transaction processing.
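A typical OLTP operation is a short transaction that either completes fully or leaves the data untouched. The sketch below illustrates this with Python's built-in `sqlite3` module. The `accounts` table, the `transfer` function, and the balances are hypothetical, and the in-memory database only stands in for a real operational system.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts "
    "(id INTEGER PRIMARY KEY, balance REAL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100.0), (2, 50.0)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move money between accounts as one all-or-nothing transaction."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            # Credit first, so a failed debit leaves work that must be undone.
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False  # CHECK constraint failed; the credit was rolled back


ok = transfer(conn, 1, 2, 30.0)
rejected = transfer(conn, 1, 2, 500.0)
balances = conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall()
print(ok, rejected, balances)
```

The second transfer would overdraw account 1, so the whole transaction is rolled back and both balances stay as the first transfer left them.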

9. Data Mining: the process of extracting patterns from large data sets by combining methods from statistics and artificial intelligence with database management. Modern businesses increasingly see data mining as an important tool for transforming data into business intelligence, giving them an informational advantage.
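As a toy illustration of "extracting patterns", the sketch below counts which pairs of items appear together in at least a minimum number of shopping baskets. It is a deliberately simple co-occurrence count, not a production mining algorithm. The basket data, the `frequent_pairs` helper, and the support threshold are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets; each set is one transaction.
BASKETS = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"butter", "milk"},
    {"bread", "milk", "eggs"},
]


def frequent_pairs(baskets, min_support):
    """Return item pairs that appear together in at least min_support baskets."""
    counts = Counter()
    for basket in baskets:
        # Sort so each pair is always counted under the same key.
        for pair in combinations(sorted(basket), 2):
            counts[pair] += 1
    return {pair: n for pair, n in counts.items() if n >= min_support}


patterns = frequent_pairs(BASKETS, min_support=2)
print(patterns)
```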